Samson Aligba

We are currently witnessing a massive misallocation of engineering effort in the AI space.

Everywhere you look, developers are trying to force Large Language Models (LLMs) to perform tasks they are inherently bad at. We try to make them generate pixel-perfect UI designs, write complex SQL database queries, or control rigid external systems directly. And when they inevitably hallucinate or fail, we blame the model: "It’s not smart enough yet."

My thesis is different: The model is fine. The interface is wrong.

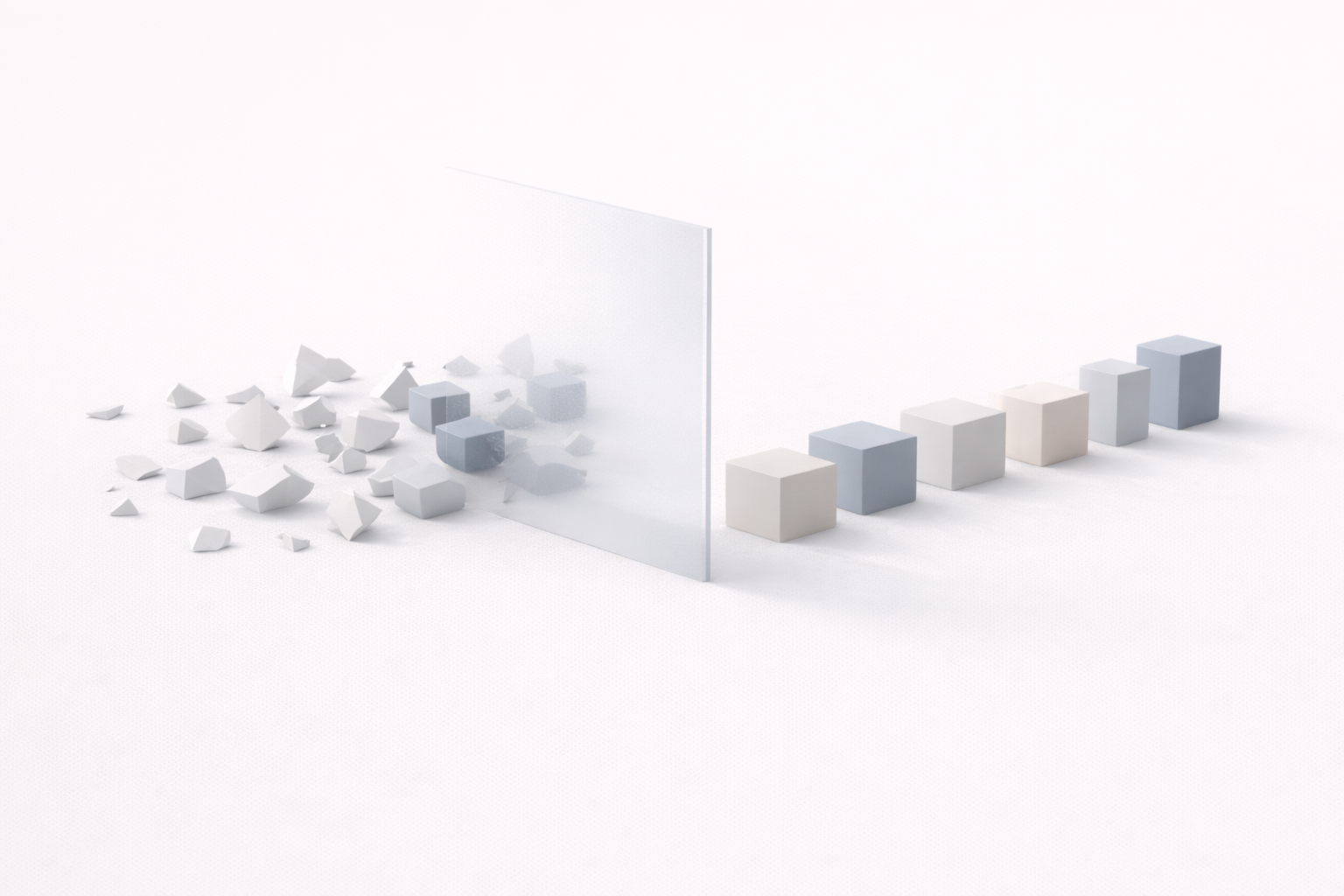

We are trying to skip a step. We are asking a probabilistic word engine to perform deterministic actions. While frameworks like OpenAI’s Function Calling, Anthropic’s Tool Use, and LangChain have made massive strides in structuring model outputs, they often result in brittle, imperative "JSON blobs" that are difficult for humans to audit and fragile to execute.

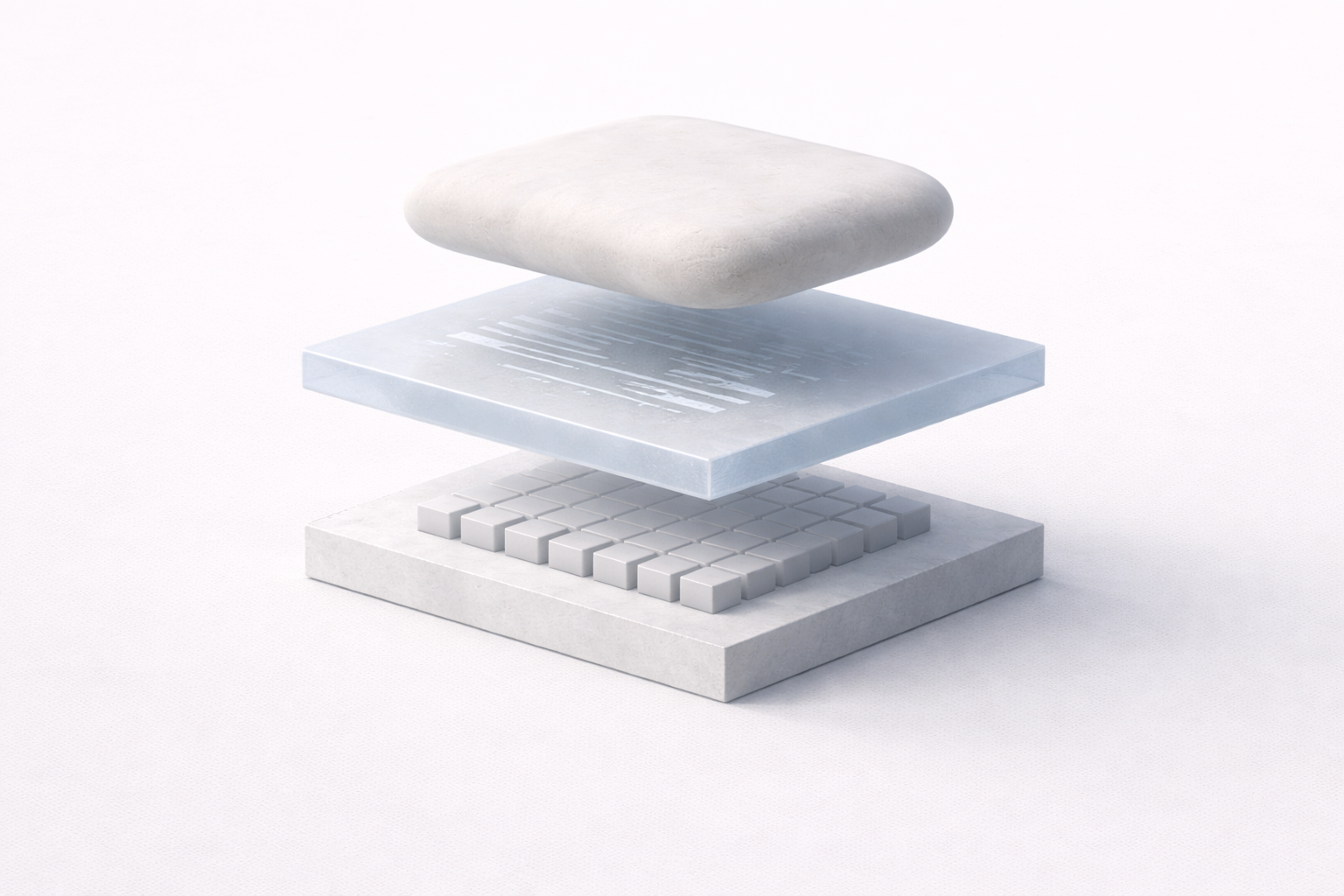

The missing layer in the Agentic World isn't a smarter model or a larger context window. The missing layer is the Domain Specific Language (DSL).

We need to treat the interface between LLMs and Software not just as a data pipe, but as a distinct Intermediate Representation (IR)—a bridge designed to be written by machines but read by humans.

Beyond Function Calling: The "Authoring" Paradigm

The industry’s current solution to "getting LLMs to do things" is Function Calling. This works wonderfully for discrete, atomic actions: get_weather(city="London").

But for complex, creative, or multi-step workflows—like generating a video, designing a dashboard, or provisioning cloud infrastructure—Function Calling breaks down. It asks the LLM to predict the final executable state in one shot.

The Failure Case

Consider an agent tasked with generating a 30-second marketing video.

Without a DSL: The agent attempts to generate a 500-line JSON payload describing every frame, hex code, and transition timing. If the model hallucinates a single invalid parameter on line 420 (e.g., easing: "super-fast" instead of ease-in), the API rejects the entire payload. The error message is cryptic, and the agent has to re-generate the whole blob, likely making a new error elsewhere.

With a DSL: The agent writes a 10-line script describing the intent. If line 3 is invalid, the compiler throws a specific error: "Line 3: 'super-fast' is not a valid easing curve. Did you mean 'urgent'?" The agent (or a human) can fix just that line.
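To make the line-specific error concrete, here is a minimal sketch of a checker for a toy `key: value` script format. Everything in it is an assumption made for illustration: the script syntax, the `VALID_EASINGS` vocabulary, and the names `DslError` and `check_script` are hypothetical. The hint comes from the standard library's `difflib`, which matches on spelling only; a semantic suggestion (such as mapping 'super-fast' to 'urgent') would likely need a hand-written alias table.

```python
import difflib

# Hypothetical vocabulary for an illustrative video DSL; not from any real tool.
VALID_EASINGS = ["ease-in", "ease-out", "linear", "urgent"]


class DslError(Exception):
    """A compile error tied to a single line of the script."""

    def __init__(self, line_no: int, message: str):
        super().__init__(f"Line {line_no}: {message}")
        self.line_no = line_no


def check_script(script: str) -> list[DslError]:
    """Validate each `key: value` line and collect line-specific errors."""
    errors = []
    for line_no, raw in enumerate(script.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, sep, value = line.partition(":")
        if not sep:
            errors.append(DslError(line_no, "expected 'key: value'"))
            continue
        key, value = key.strip(), value.strip()
        if key == "easing" and value not in VALID_EASINGS:
            # Spelling-based hint only; returns an empty list if nothing is close.
            hint = difflib.get_close_matches(value, VALID_EASINGS, n=1)
            suffix = f" Did you mean '{hint[0]}'?" if hint else ""
            errors.append(
                DslError(line_no, f"'{value}' is not a valid easing curve.{suffix}")
            )
    return errors


script = """scene: intro
title: Launch Day
easing: ease-inn
duration: 3s"""

for err in check_script(script):
    print(err)
```

Because each error carries its own line number, the agent (or a human) can patch line 3 and re-run the check without regenerating the rest of the script.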

The Solution: Shift from Action to Authoring

Instead of asking the LLM to execute the work directly against an API, we should ask the LLM to write a script that describes the work.

Abstraction Density, Not Just Syntax

A common counter-argument is: "JSON is a text format too. Why create a new language?"

This misses the point. The value of a DSL isn't that it avoids curly braces; it's that it forces Domain-Specific Abstraction.

Standard APIs are often verbose because they expose mechanism. A raw video API might require 50 parameters to animate a title (x, y, opacity, kerning, ease-in, ease-out, duration). A well-designed DSL exposes intent. It compresses those 50 mechanical parameters into one semantic concept: mood: confident.

Whether you express this in JSON ({"mood": "confident"}) or a custom text format (mood: confident) is secondary. The win comes from having mood as a first-class primitive that the LLM can reason about, rather than asking it to hallucinate the mathematics of a cubic-bezier curve.
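The compression from mechanism to intent can be sketched as a lookup that the DSL author writes once. The preset values, the `TitleAnimation` fields, and the `expand_mood` helper below are all hypothetical; a real renderer would expose many more parameters than this illustrative subset.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TitleAnimation:
    """Low-level parameters a raw video API might require (illustrative subset)."""
    duration_s: float
    opacity_from: float
    opacity_to: float
    bezier: tuple[float, float, float, float]  # cubic-bezier control points


# Hypothetical presets: the DSL author picks the numbers once,
# so the model never has to guess them.
MOOD_PRESETS = {
    "confident": TitleAnimation(0.4, 0.0, 1.0, (0.2, 0.8, 0.2, 1.0)),
    "calm": TitleAnimation(1.2, 0.0, 1.0, (0.4, 0.0, 0.6, 1.0)),
}


def expand_mood(mood: str) -> TitleAnimation:
    """Lower one semantic token into the mechanical parameters behind it."""
    try:
        return MOOD_PRESETS[mood]
    except KeyError:
        raise ValueError(
            f"unknown mood {mood!r}; expected one of {sorted(MOOD_PRESETS)}"
        ) from None


print(expand_mood("confident"))
```

The model only ever chooses a key from `MOOD_PRESETS`; the curve mathematics lives in code that a human wrote and reviewed.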

Why This Matters: Solving the "Black Box" Problem

Moving to an Intermediate Representation solves two specific engineering problems that "more intelligence" cannot fix.

1. The "Human-in-the-Loop" Becomes Real

If an agent generates a massive JSON payload to configure a server and makes a mistake, the human operator has to debug a raw data structure. It is painful and error-prone.

If the agent generates a distinct, readable language, the output becomes editable. If the LLM misspells a title or chooses the wrong chart color, the human can simply edit the text file. The "Language Layer" gives humans a handle to grab onto. It transforms AI output from a "take it or leave it" binary into a collaborative draft.

2. Solving Capability Hallucination

We must distinguish between two types of hallucinations:

Fact Hallucination: The model says revenue was $10M when it was $5M. (DSLs do not fix this).

Capability Hallucination: The model tries to perform an action that doesn't exist, or combines parameters that shouldn't go together.

A DSL acts as a Policy Engine. If the LLM writes mood: aggressive and your DSL compiler only supports confident and calm, the compiler rejects it before execution. You can enforce business logic constraints (e.g., "Logos must always be in the bottom right") in the compiler, guaranteeing that even if the LLM "drifts," the final output remains compliant.
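A minimal sketch of that policy check, assuming the script has already been parsed into a dictionary. The names `ALLOWED_MOODS`, `PolicyError`, and `compile_scene` are hypothetical, and the override behaviour for the logo is one possible design; a stricter compiler could reject the scene instead.

```python
ALLOWED_MOODS = {"confident", "calm"}    # hypothetical whitelist from the example above
REQUIRED_LOGO_POSITION = "bottom-right"  # the business rule quoted in the text


class PolicyError(ValueError):
    """Raised when a scene asks for something the DSL does not support."""


def compile_scene(scene: dict) -> dict:
    """Validate a parsed scene and return a compliant render plan."""
    mood = scene.get("mood")
    if mood not in ALLOWED_MOODS:
        # Reject before anything is executed or rendered.
        raise PolicyError(
            f"mood {mood!r} is not supported; allowed: {sorted(ALLOWED_MOODS)}"
        )
    plan = dict(scene)  # copy so the caller's input is left untouched
    # The compiler, not the model, owns logo placement: any requested value is overridden.
    plan["logo_position"] = REQUIRED_LOGO_POSITION
    return plan


plan = compile_scene({"mood": "calm", "logo_position": "top-left"})
print(plan["logo_position"])
```

Here the drifted `top-left` request is silently corrected, while `compile_scene({"mood": "aggressive"})` raises `PolicyError` before any rendering work starts.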

When to Use This Pattern

This approach is not a silver bullet. Designing a DSL is expensive. Here is a simple heuristic for when to invest in it:

Use Function Calling if your domain has fewer than 10 distinct actions and they rarely combine (e.g., "Turn on light", "Play music", "Add to calendar"). The state space is small, and the actions are atomic.

Use a DSL if you are building a system where users might chain primitives in unpredictable ways (e.g., "Create a video scene with a chart, then a transition, then a text overlay that reacts to the music"). If the value of your software comes from compositionality, you need a language.

The "Bootstrapping" Problem

We must be realistic: Designing a good DSL is incredibly hard.

It is not as simple as "telling the LLM to write code." You face the "Bootstrapping Problem":

Expressiveness vs. Learnability: If your language is too simple, it can't do enough. If it's too complex, the LLM will struggle to learn the syntax without massive fine-tuning.

The "Uncanny Valley" of Syntax: LLMs are trained on Python, SQL, and HTML. If your DSL looks almost like Python but behaves differently, the model will constantly regress to Python syntax.

Engineering Overhead: You are no longer just building an app; you are building a compiler, a parser, and a renderer.

But for domains that require compositionality, creativity, or strict governance, the DSL pattern is unmatched. It solves the interface between intent and execution—not the equally hard problems of longitudinal memory or multi-step planning, but a foundation where those solutions have somewhere solid to land. It moves us away from the fragile world of "Prompt and Pray" and toward a future where Agents are not just black-box executors, but auditable authors that speak a language we can understand, trust, and control.